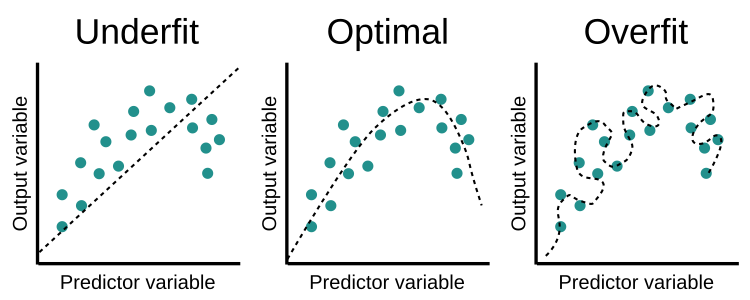

Small alpha ==> high-degree polynomials ==> more complex H ==> better chance of approximating F ==> overfitting ==> good only on the training data, not for predicting new data

Large alpha ==> less complex H ==> better chance of generalizing out of sample ==> risk of underfitting ==> poor fit even on the training data

The right alpha ==> the value that balances approximation and generalization.
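
One way to make this trade-off concrete is to write out the training objective. This is only a sketch under an assumption: that alpha is the weight of an L2 (ridge) penalty on the polynomial coefficients, which these notes do not state explicitly. The symbols $w$ (coefficient vector) and $\phi(x_n)$ (polynomial features of the $n$-th input) are introduced here for illustration.

$$
\min_{w} \; \sum_{n=1}^{N} \left( y_n - w^\top \phi(x_n) \right)^2 + \alpha \, \lVert w \rVert_2^2
$$

With alpha = 0 this reduces to ordinary least squares, so the high-degree terms are free to chase the training points. As alpha grows, large coefficients become expensive, which effectively restricts H to smoother functions.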

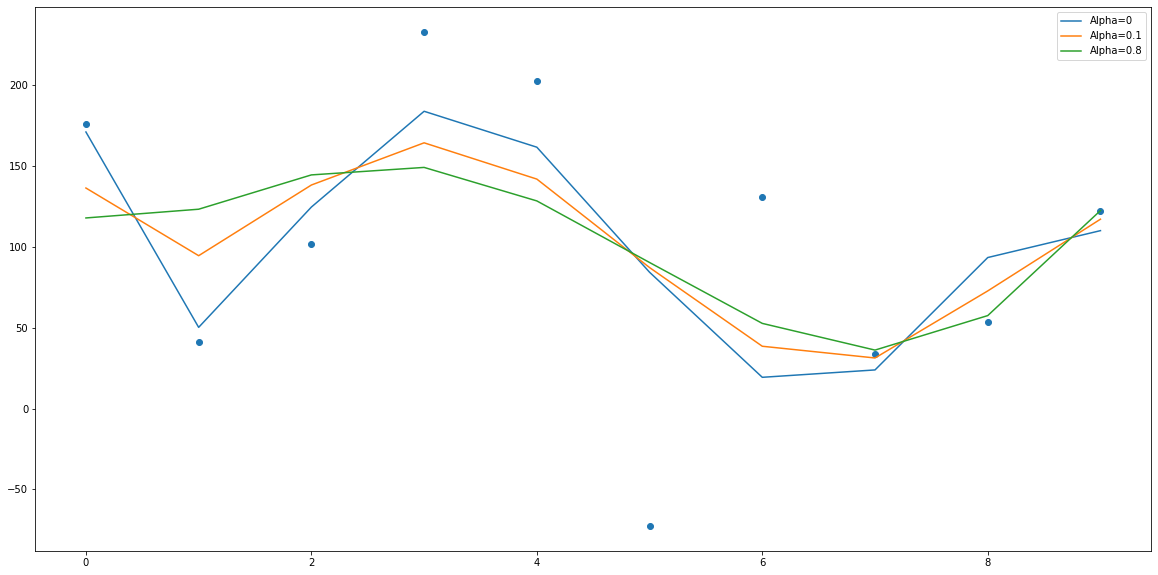

The result of the attached code is shown below:

As we can see, increasing alpha gives a smoother curve that fits the training data less closely.
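
The attached code is not reproduced here. As an independent, minimal sketch of the same effect, the snippet below fits a polynomial with an L2 penalty for three values of alpha and records the training error, the test error, and the size of the coefficients. Everything in it is an assumption made for illustration: the ridge penalty itself, the sine target, the noise level, the degree, the alpha values, and the helper names (`ridge_fit`, `solve`, `mse`). It also penalizes the intercept for simplicity, which some libraries do not do. It uses only the standard library so that it runs without extra dependencies.

```python
import math
import random


def poly_features(x, degree):
    """Return the polynomial features [1, x, x^2, ..., x^degree]."""
    return [x ** d for d in range(degree + 1)]


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (M[i][n] - s) / M[i][i]
    return w


def ridge_fit(xs, ys, degree, alpha):
    """Minimise sum (y - w.phi(x))^2 + alpha * ||w||^2 via the normal equations."""
    Phi = [poly_features(x, degree) for x in xs]
    k = degree + 1
    A = [[sum(p[i] * p[j] for p in Phi) + (alpha if i == j else 0.0)
          for j in range(k)] for i in range(k)]
    b = [sum(p[i] * y for p, y in zip(Phi, ys)) for i in range(k)]
    return solve(A, b)


def mse(w, xs, ys):
    """Mean squared error of the polynomial with coefficients w."""
    degree = len(w) - 1
    total = 0.0
    for x, y in zip(xs, ys):
        pred = sum(wi * f for wi, f in zip(w, poly_features(x, degree)))
        total += (y - pred) ** 2
    return total / len(xs)


rng = random.Random(0)  # fixed seed so runs are repeatable


def make_data(n):
    """Noisy samples of sin(pi * x) on [-1, 1] (an illustrative target)."""
    xs = [-1 + 2 * i / (n - 1) for i in range(n)]
    ys = [math.sin(math.pi * x) + rng.gauss(0, 0.2) for x in xs]
    return xs, ys


train_x, train_y = make_data(15)
test_x, test_y = make_data(50)

results = []  # (alpha, train MSE, test MSE, coefficient norm)
for alpha in (1e-3, 1e-1, 10.0):
    w = ridge_fit(train_x, train_y, degree=8, alpha=alpha)
    norm = math.sqrt(sum(wi * wi for wi in w))
    results.append((alpha, mse(w, train_x, train_y), mse(w, test_x, test_y), norm))
    print(f"alpha={alpha:g}  train_mse={results[-1][1]:.4f}  "
          f"test_mse={results[-1][2]:.4f}  coef_norm={norm:.3f}")
```

Under this setup the training error can only rise and the coefficient norm can only shrink as alpha grows, which is the smoothing seen in the plot. The test error depends on the noise draw, so the best alpha is normally chosen on held-out data rather than on the training error.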